An artificial intelligence for listening to paintings

What does Gustav Klimt's 'The Kiss' sound like? What sonic torment rises from Francisco de Goya's 'Saturn Devouring His Son'?

Paintings don't have music, we might think. They are silent, purely visual works. In Klimt's painting, the posture of the lovers and the warm colors convey tenderness and love. In Goya's, Saturn's expression and the darkness horrify us. However hard we try to put it into words, nothing compares with being able to see the work.

Until now, blind and visually impaired people were largely deprived of the feelings that painting evokes. However, thanks to an artificial intelligence model and the ingenuity of five young people, the days of this discrimination may be numbered. They have created a technology that extracts the feelings of a painting and transforms them into music, making it possible for people who cannot see to hear a painting.

Mathematics that see, mathematics that compose

The idea of creating an artificial intelligence to help blind people enjoy painting arose from a deep learning course organized in Madrid by Saturdays AI. At the end of the course, the participants had to develop a project demonstrating what they had learned.

“Those of us on the team met and decided that we could use the opportunity to do something useful and have a positive impact,” explains Guillermo García, a student of the Double Degree in Mathematics and Computer Science at the Autonomous University of Madrid. “The first idea was an AI that composes music. We thought it was fun, but it lacked a practical purpose. After thinking about it a lot and discussing it with family, my girlfriend came up with the idea of combining composition with image analysis to help blind people hear paintings.”

Alongside Guillermo García, the team behind the project includes Miguel Ángel Reina, a data analyst; Diego Martín, a data scientist and aerospace engineer; Sara Señorís, a physicist and data scientist; and Borja Menéndez, who holds a PhD in operations research. Together they built a model that actually combines two artificial intelligences: a convolutional neural network and a generative adversarial network, or GAN. The name for their technology was served up on a platter: GANs N' Roses.

“The logic behind the process is divided into two parts. First, we have a model trained to detect the sentiment in a painting and tell us its distribution. In other words, this is a painting with 50% happiness, 30% love and 20% sadness”, explains Guillermo García. This is where the convolutional neural network comes into play, a type of network that works in a way somewhat similar to the neurons of the brain's primary visual cortex.
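To make the idea of a "distribution of sentiment" concrete, here is a minimal sketch of how a classifier's raw scores can be turned into percentages per emotion. It assumes the network ends in a softmax layer over a fixed set of emotion labels; the article does not describe the team's architecture or label set, so the three labels and the scores below are illustrative only, chosen to reproduce García's example.

```python
import math

# Hypothetical label set: the three emotions mentioned in the article's example.
EMOTIONS = ["happiness", "love", "sadness"]


def softmax(logits):
    """Turn raw network scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def emotion_distribution(logits, labels=EMOTIONS):
    """Pair each emotion label with its share of the painting."""
    return dict(zip(labels, softmax(logits)))


# Made-up scores, chosen so the output matches the 50/30/20 example.
logits = [math.log(0.5), math.log(0.3), math.log(0.2)]
dist = emotion_distribution(logits)
for name, share in dist.items():
    print(f"{name}: {share:.0%}")
```

A softmax is simply the most common way to read a multi-class classifier's output as proportions; the real model may compute its distribution differently.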

This network was trained on the WikiArt Emotions Dataset, which includes more than 4,000 works from different styles of the Western pictorial tradition. From there, they built a model of the distribution of emotions in each painting, which they could use to guide the second artificial intelligence, the GAN.

“This second model works almost like a human composer. It needs some inspiration from which to unleash its creativity. We use the sentiment distribution from the first model to select the existing piece of music that best fits that distribution. Once it's selected, we give the first five seconds to the composer model, and it generates a unique, original piece two or three minutes long”, adds Guillermo García.
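The selection step García describes can be sketched as a nearest-match search: compare the painting's emotion distribution with the distribution tagged on each existing piece and keep the closest one, then cut its opening seconds as the composer model's prompt. This is a hypothetical illustration, not the team's code. The catalogue, the choice of total variation distance as the similarity measure, and the representation of audio as a flat list of samples are all assumptions; the article says neither how "best fits" is measured nor whether the music is handled as audio or as symbolic notes.

```python
# Hypothetical catalogue: each existing piece tagged with an emotion distribution.
LIBRARY = {
    "piece_a": {"happiness": 0.7, "love": 0.2, "sadness": 0.1},
    "piece_b": {"happiness": 0.1, "love": 0.2, "sadness": 0.7},
    "piece_c": {"happiness": 0.4, "love": 0.4, "sadness": 0.2},
}


def total_variation(p, q):
    """Half the sum of absolute differences; 0 means identical distributions."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


def select_seed_piece(painting_dist, library):
    """Return the name of the piece whose distribution is closest to the painting's."""
    return min(library, key=lambda name: total_variation(painting_dist, library[name]))


def first_seconds(samples, sample_rate, seconds=5):
    """Cut the opening of a piece (assumed to be raw audio samples) as the prompt."""
    return samples[: int(sample_rate * seconds)]


painting = {"happiness": 0.5, "love": 0.3, "sadness": 0.2}
seed = select_seed_piece(painting, LIBRARY)
print(seed)  # piece_c, the closest match (distance 0.1)
```

In this toy catalogue the painting from the previous example lands closest to `piece_c`; with a real catalogue, any distance between probability distributions (cosine similarity, KL divergence) could play the same role.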

Broadly speaking, then, the GANs N' Roses model consists of an AI capable of analyzing a painting and translating it into a distribution of feelings, plus a platform that selects an existing song based on that distribution. That song serves as inspiration for the conductor: an artificial intelligence capable of composing something new based on what it has heard.

A new way to experience art

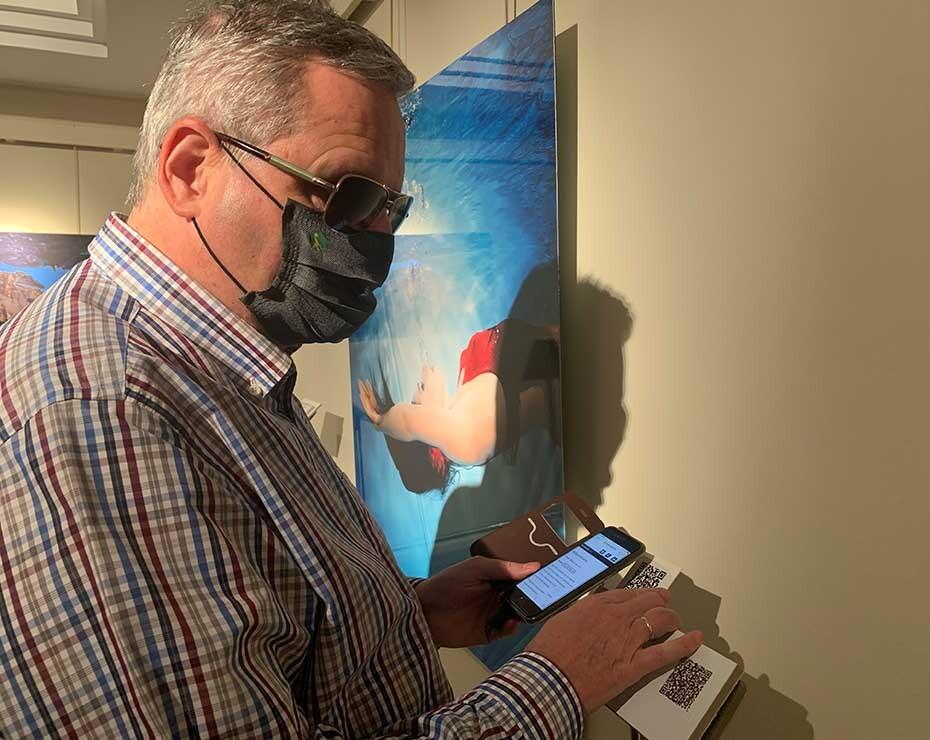

The result can now be seen and heard live. Since last October, the ONCE Typhlological Museum in Madrid has exhibited a series of 11 paintings that can be heard. Thanks to GANs N' Roses, museum visitors can not only listen to a textual description of the painting (an increasingly common accessibility measure in museums), but also listen to music created by an algorithm to convey the emotions of the painting.

The reception has been so good that the ONCE museum has decided to extend the exhibition. Originally planned to run for a month, it will now remain open until January 15. “From what some blind users have said, they can now experience the paintings more fully. The exhibits may have an audio description of the painting's elements and techniques, but that doesn't stir your feelings. If we add music, things change”, explains Guillermo García.

Looking to the future, they would like to bring their technology to other, higher-profile museums where the accessibility of the works is not always a priority. "In addition, we believe that it not only helps blind people, but can also create a new way of experiencing art for everyone," adds García.

In fact, a new idea is already taking shape in his mind: to explore the reverse path. “Achieving a model that converts music into a painting. Both to create a new artistic experience and to help deaf people see music.”


Images | ONCE, courtesy of Guillermo García
