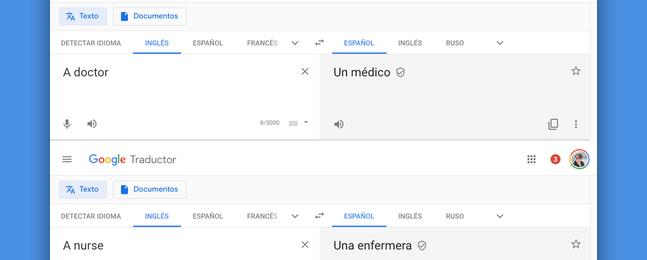

Any user can test these cases, or others, in Google Translate, either in the app or from the search engine. Although this case is notable, it is just one of many biases found in artificial intelligence algorithms and systems that learn from examples created by society and, in doing so, reproduce human errors.

Google has explained to Xataka that "Google Translate works by learning patterns from millions of translation examples that appear on the web. Unfortunately, this means that the model can unintentionally replicate existing gender biases." The company is working on updating the product to correct these biases, which were already evident in 2017.

This would explain why a more egalitarian translation is already offered in English: it has a greater variety of examples and is usually the first language developers work with. "We look forward to bringing the experience to more features and starting to address non-binary gender in translations," Google says.